What Problem Does This Solve?

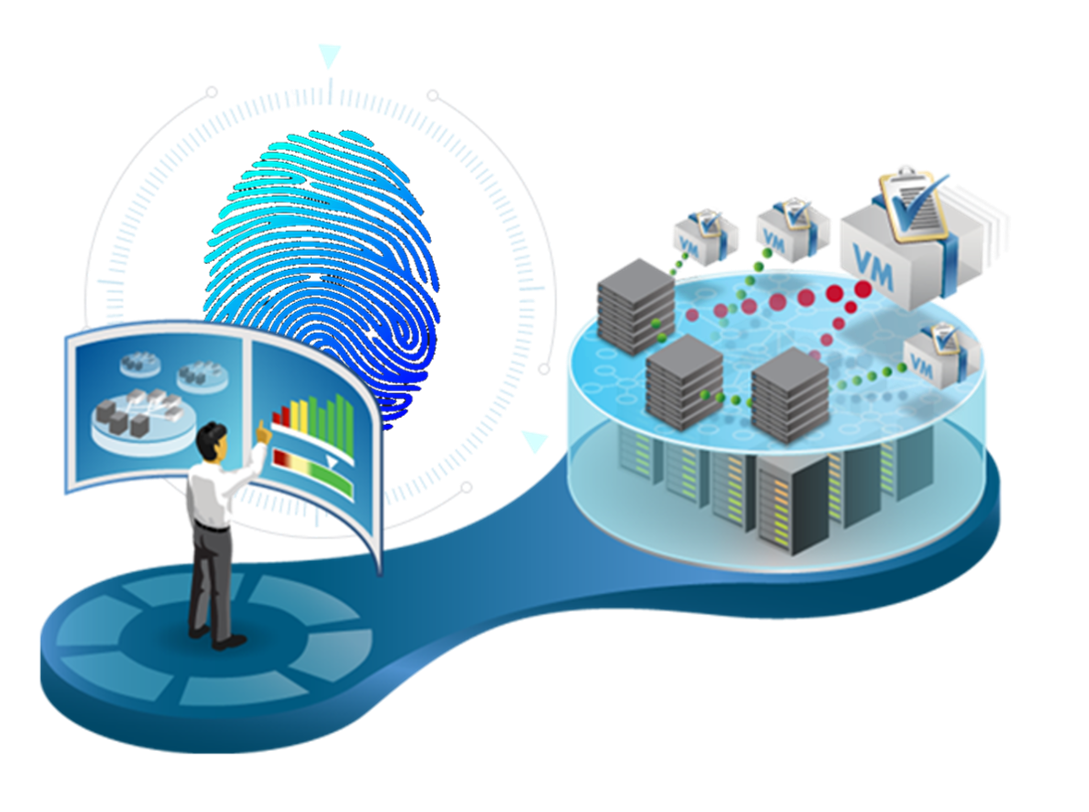

Data forensics, a term often used interchangeably with digital forensics, is the study of digital data and how it is created and used, for the purpose of an investigation. It is part of the broader discipline of forensics, in which various types of evidence are studied to investigate an alleged crime, determine intent, identify sources (for example, in copyright cases), or authenticate documents, often involving complex timelines or hypotheses.

Digital forensics is the process of uncovering and interpreting electronic data. The goal of the process is to preserve any evidence in its most original form while performing a structured investigation by collecting, identifying and validating the digital information for the purpose of reconstructing past events.
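One common way to show that evidence has been preserved in its original form is to compare cryptographic hashes of the original and of the working copy. The sketch below illustrates that idea with Python's standard `hashlib` module; the function names and the sample log line are assumptions made for illustration and are not part of any particular forensics tool.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def verify_copy(original: bytes, working_copy: bytes) -> bool:
    """Report whether a working copy is byte-for-byte identical to the original,
    judged by comparing SHA-256 digests."""
    return sha256_digest(original) == sha256_digest(working_copy)


# Hypothetical evidence: a single log line captured during collection.
evidence = b"2021-03-04 10:15:02 login user=alice"
faithful_copy = bytes(evidence)   # unchanged duplicate
altered_copy = evidence + b" "    # one trailing byte added

print(verify_copy(evidence, faithful_copy))
print(verify_copy(evidence, altered_copy))
```

In practice the digest of the original would be recorded at collection time, so that any later copy can be checked against it without touching the original again.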

Data forensics can involve many different tasks, including data recovery or data tracking and extraction of Personally Identifying Information (PII). Data forensics might focus on recovering information on the use of a mobile device, computer or other device. It might cover the tracking of phone calls, texts or emails through a network. Digital forensics investigators may also use various methodologies to pursue data forensics, such as decryption, advanced system searches, reverse engineering, or other high-level data analyses.

Some experts make a distinction between two types of data collected in data forensics. One is persistent data, which is permanently stored on a drive and is therefore easier to find. The other is volatile data, or data that is transient and elusive. Data forensics often focuses on volatile data, or on a mix of data that has become difficult to recover or analyze for some reason. In other cases, data forensics professionals focus on persistent data that is easy to come by but must be assessed in depth in order to prove criminal intent.

Data Lineage

Data lineage is generally defined as a kind of data life cycle that includes the data's origins and where it moves over time. This term can also describe what happens to data as it goes through diverse processes. Data lineage can help with efforts to analyze how information is used and to track key bits of information that serve a particular purpose.
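As a rough illustration of tracing data back to its origins, lineage can be modeled as a mapping from each dataset to the datasets it was derived from. The dataset names and the `trace_origins` helper below are hypothetical, and the sketch assumes the lineage graph contains no cycles.

```python
from typing import Dict, List, Set

# Hypothetical lineage map: each dataset lists the datasets it was derived from.
LINEAGE: Dict[str, List[str]] = {
    "sales_report": ["sales_clean"],
    "sales_clean": ["pos_export", "web_orders"],
    "pos_export": [],
    "web_orders": [],
}


def trace_origins(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Return the root sources (datasets with no upstream) that feed `dataset`.

    Assumes the lineage graph is acyclic.
    """
    parents = lineage.get(dataset, [])
    if not parents:
        # A dataset with no recorded upstream is treated as an origin.
        return {dataset}
    origins: Set[str] = set()
    for parent in parents:
        origins |= trace_origins(parent, lineage)
    return origins


print(sorted(trace_origins("sales_report", LINEAGE)))
```

A real lineage system would also record the transformation applied at each step; this sketch keeps only the "derived from" relationship.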

One common application of data lineage methodologies is in the field of business intelligence, which involves gathering data and building conclusions from that data. Data lineage helps to show, for example, how sales information has been collected and what role it could play in new or improved processes that put the data through additional flow charts within a business or organization. All of this is part of a more effective use of the information that businesses or other parties have obtained.

Another use of data lineage, as pointed out by business experts, is in safeguarding data and reducing risk. By collecting large amounts of data, businesses and organizations are exposing themselves to certain legal or business liabilities. These relate to any possible security breach and exposure of sensitive data. Using data lineage techniques can help data managers handle data better and avoid some of the liability associated with not knowing where data is at a given stage in a process.

Thematic Analysis

If you can describe it, we can query it. Know SQL and JavaScript? Then you already know how to use the platform. Whatever the format, a powerful query language lets you work with structured or unstructured data, speeding up development and simplifying deployment for unmatched data agility.

A rich Data Virtualization layer links file systems, Web servers, relational databases, NoSQL and Big Data storage, allowing them to be queried and joined to any other data. Virtual collections let you integrate data from any source and easily load it into memory for fast processing and analysis.

Topic Modeling + Key Phrases

We know you're busy. The data fabric is purpose-built to reduce the burden on support teams. Cognitive Technology features for AI Ops let you automate administrative tasks and engage teams globally to solve problems, reduce downtime and accelerate problem resolution.

Collect and store critical metrics to monitor operations in real time. Define Triggers and Task Lists that react to changes in KPIs, application data, CPU and memory usage, and resource availability. Log and machine data can be automatically classified, analyzed for context or relevance and easily integrated into Call Center applications to automate support decisions.
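The idea of a trigger that reacts to metric changes can be sketched generically as a threshold check over a sample of metrics. This is not the platform's own Trigger or Task List API; the `Trigger` class, the `evaluate` function, the metric names and the thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Trigger:
    """Hypothetical trigger: fire `action` when `metric` exceeds `threshold`."""
    metric: str
    threshold: float
    action: Callable[[str, float], str]


def evaluate(triggers: List[Trigger], sample: Dict[str, float]) -> List[str]:
    """Run every trigger against one metrics sample and collect action results."""
    fired: List[str] = []
    for trigger in triggers:
        value = sample.get(trigger.metric)
        # Metrics missing from the sample are skipped rather than treated as zero.
        if value is not None and value > trigger.threshold:
            fired.append(trigger.action(trigger.metric, value))
    return fired


def open_ticket(metric: str, value: float) -> str:
    """Stand-in for a support action, such as raising a call center ticket."""
    return f"ticket: {metric}={value}"


triggers = [
    Trigger("cpu_percent", 90.0, open_ticket),
    Trigger("memory_percent", 85.0, open_ticket),
]

print(evaluate(triggers, {"cpu_percent": 95.0, "memory_percent": 40.0}))
```

Keeping the action as a plain callable means the same trigger definition can log, alert or open a ticket, depending on what is passed in.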

Synonymy, Hypernymy or Similarity

StreamScape's cognitive technology makes use of Natural Language Processing and Classification to discover meaning and context in data by analyzing language and sentence structure. Microflows and Data Integration services make it easy to import data and automate model training.

Thematic Analysis services let users easily collect and analyze text, document, image or audio data to understand concepts, opinions, or experiences and gain deeper insight into problems. This type of analysis is considered interpretative, as it is shaped and informed by domain-specific knowledge, concepts and terminology.

Feature Engineering

Enterprise-grade, integrated security keeps your data safe. Entity or column-level Authorization, Group, Organization and API security protect critical information against unwanted access and data co-mingling.

Expose functions, queries and AI services as fully documented APIs, powered by the industry's leading Open API framework. Develop real-time, AI-powered applications that integrate documents, web or application data from any source.

Connect to the platform using any language, Web application, OData-compliant system, XMPP or JDBC client, Microsoft Office product, or Reporting and Data Visualization tool.

Download a full version of the Data Fabric.